It was 1956 when American mathematician John McCarthy coined the term Artificial Intelligence (AI) at Dartmouth College, New Hampshire, USA.

On that occasion the new discipline was formally founded and, although the conference did not go as the organizers (McCarthy above all) had expected, it was of considerable historical importance. The exchange among the academics who took part was stimulating, and it was they and their students who went on to achieve the main successes in the field.

It was after the Dartmouth Conference that AI became a field of intellectual research in its own right and, controversial as it was, from that moment on it progressed more and more rapidly.

Our journey through the history of Artificial Intelligence continues.

The enthusiasm and successes of the first golden age of AI (1956-1974)

The programs developed in the years following the “Dartmouth Summer Research Project on Artificial Intelligence” were, for most people of the time, simply surprising.

Computers became faster, cheaper and more accessible. They could store more information and were able to solve algebra problems, prove geometry theorems, and even learn to speak a language.

At that time few people would have believed that such “intelligent” behavior by machines was possible, but from 1957 to 1974 there was a “flowering” of Artificial Intelligence.

Researchers were highly optimistic, and some government agencies such as DARPA (Defense Advanced Research Projects Agency), the institution that develops new technologies for military use, invested tens of millions of dollars in AI projects at several universities. It must be remembered that, in the middle of the Cold War, a machine able to transcribe and translate a foreign language (Russian in this case) would have been a considerable advantage for the American government.

Scientific research focused on Artificial Intelligence, between the late 1950s and the 1960s, followed different paths and developments.

Reasoning and problem-solving

Many of the first AI programs used the same basic algorithm to achieve a goal (winning a chess game or proving a theorem), proceeding toward it step by step according to a paradigm called “Reasoning as search”.

In 1957 Allen Newell and Herbert Simon tried to build a general version of this algorithm, producing the General Problem Solver (GPS), a mechanism inspired by human reasoning. Starting from given premises and rules and applying problem-solving strategies step by step, GPS could act on and manipulate objects within the representation of a room and, for example, reach an object resting on a table by stacking two chairs.
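To make the “Reasoning as search” idea concrete, here is a minimal sketch in Python. It is not GPS itself (which relied on means-ends analysis); it uses a plain breadth-first search instead, and the toy world, the state layout `(chairs_stacked, on_stack, has_object)` and the action names are all assumptions made up for illustration.

```python
from collections import deque


def search(start, is_goal, successors):
    """Breadth-first search: return the shortest list of actions
    leading from `start` to a goal state, or None if none exists."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, path = frontier.popleft()
        if is_goal(state):
            return path
        for action, nxt in successors(state):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, path + [action]))
    return None


# Hypothetical toy world: state = (chairs_stacked, on_stack, has_object).
def successors(state):
    chairs, on_stack, has_object = state
    if not on_stack and chairs < 2:
        yield "stack a chair", (chairs + 1, on_stack, has_object)
    if not on_stack and chairs > 0:
        yield "climb the stack", (chairs, True, has_object)
    if on_stack:
        yield "climb down", (chairs, False, has_object)
    # Assumption: the object is only reachable from a stack of two chairs.
    if on_stack and chairs == 2 and not has_object:
        yield "grab the object", (chairs, on_stack, True)


def is_goal(state):
    return state[2]  # the agent holds the object


plan = search((0, False, False), is_goal, successors)
print(plan)
```

The program knows nothing about chairs or tables: it only explores states reachable through the rules until one satisfies the goal. This is why the same search procedure could, in principle, be reused for games and theorem proving alike.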

Natural language processing

An important goal of AI researchers was to enable computers to communicate in natural languages such as English.

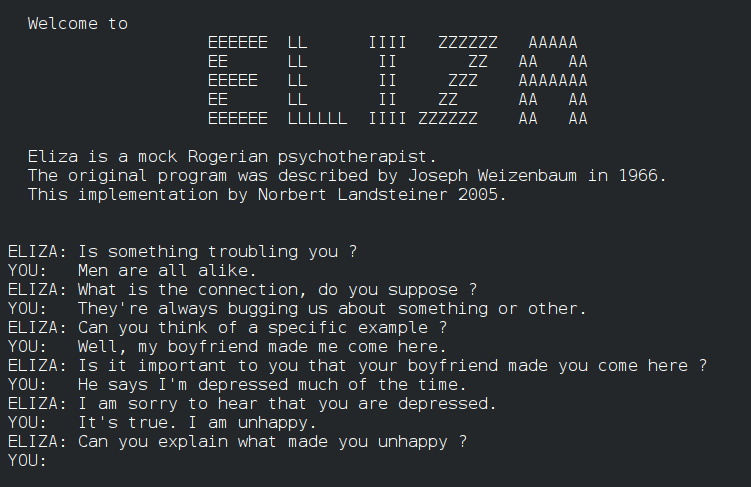

Between 1964 and 1966 Joseph Weizenbaum created ELIZA, a pioneering application of natural language processing. The program, a parody of a Rogerian therapist, was able to simulate realistic conversations with humans using pattern-matching rules. Basically, it looked for simple keywords and replaced them with pre-packaged phrases, answering the patient with questions obtained by rephrasing the patient's own statements. So, for example, to the phrase “My head hurts” the program could reply “Why do you say your head hurts?”, and the answer to “My mother hates me” could be “Who else in your family hates you?”
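The keyword-and-template mechanism described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two rules, the `REFLECTIONS` table and the fallback reply are hypothetical and are not taken from Weizenbaum's original script.

```python
import re

# Hypothetical pronoun swaps used to turn the user's words back on them.
REFLECTIONS = {"my": "your", "me": "you", "i": "you",
               "am": "are", "your": "my", "you": "me"}

# Hypothetical rules: (pattern, reply template). The first match wins.
RULES = [
    (re.compile(r"my (mother|father|sister|brother) (.+) me", re.I),
     "Who else in your family {1} you?"),
    (re.compile(r"(.+)", re.I),
     "Why do you say {0}?"),
]


def reflect(text):
    """Swap first- and second-person words, leaving the rest untouched."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in text.split())


def respond(statement):
    """Return a canned reply built from the first rule that matches."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            groups = [reflect(group) for group in match.groups()]
            return template.format(*groups)
    return "Please go on."


print(respond("My head hurts"))
print(respond("My mother hates me"))
```

Nothing in this sketch understands the sentence: the reply is the user's own words, lightly rearranged. That is enough to sustain the illusion for a few exchanges, and it also explains why the illusion breaks as soon as the input falls outside the rules.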

Depending on the sentences the user typed into ELIZA, the illusion of a human interlocutor was either quickly unmasked or could last for several exchanges. Sometimes it was so convincing that people believed they were communicating with a human being and kept the conversation going for several minutes.

ELIZA is remembered not only as the first chatterbot (or chatbot) but also as a fundamental step in the history of AI: it was the first time that human-machine interaction was designed with the aim of creating the illusion of a conversation between human beings.

Robotics

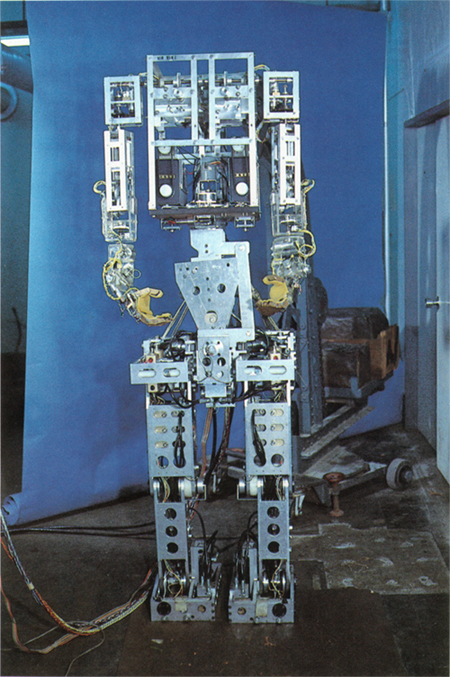

In 1972, the first anthropomorphic robot, called WABOT-1, was built at Waseda University in Japan. Its features included limb mobility (it could walk with its lower limbs and grab and carry objects with its upper ones), the ability to see (through external receptors it could measure the distance and direction of objects) and the ability to converse (it could communicate with a person in Japanese).

The first AI winter (1974-1980)

Many scientists believed that by the 1980s they would have built fully intelligent machines capable of performing any task a human could do. These expectations and hopes, however, turned out to be too ambitious. It was precisely in the areas that seemed simpler, such as automated translation or the reproduction of natural language, that AI encountered the greatest difficulties.

It was thought that automated translation could be done through simple syntactic manipulation, which turned out to be wrong. The method often led to grotesque results, such as the English sentence “The spirit is willing, but the flesh is weak” which, after machine translation into Russian, reportedly came out as the equivalent of “The whiskey is strong, but the meat is rotten”.

The failure of these initial attempts was documented in the ALPAC (Automatic Language Processing Advisory Committee) report, delivered to the US government in 1966, which led to funding cuts for many AI research projects. DARPA, which had invested heavily in AI research in the hope of translating scientific articles from Russian into English, abandoned the effort for lack of results.

Other complications were related to the limited computing power of the machines. Computers of the time could neither store enough data nor process it quickly enough.

Even the best programs developed were only able to handle trivial problems, as the amount of processing power needed to solve more complex problems went far beyond what computers could do at the time. For example, one of the first Artificial Intelligence systems that analyzed the English language was able to handle a vocabulary of only 20 words, because it could not store others in its memory.

The enthusiasm of the early years began to wane, and the dream of building “intelligent” machines was temporarily set aside. The field entered the so-called first winter of Artificial Intelligence, marked by a severe shortage of government funding for AI research and a decline in general interest in this ambitious field.

In the 1980s, however, a new way of doing Artificial Intelligence caught on and became the focus of AI research, marking the end of this temporary standstill: Expert Systems were born.